MINERVAS: Massive INterior EnviRonments VirtuAl Synthesis

News

- 2022-08: The MINERVAS System has been accepted to Computer Graphics Forum, Pacific Graphics 2022!

- 2021-07: The MINERVAS System is available online!

Abstract

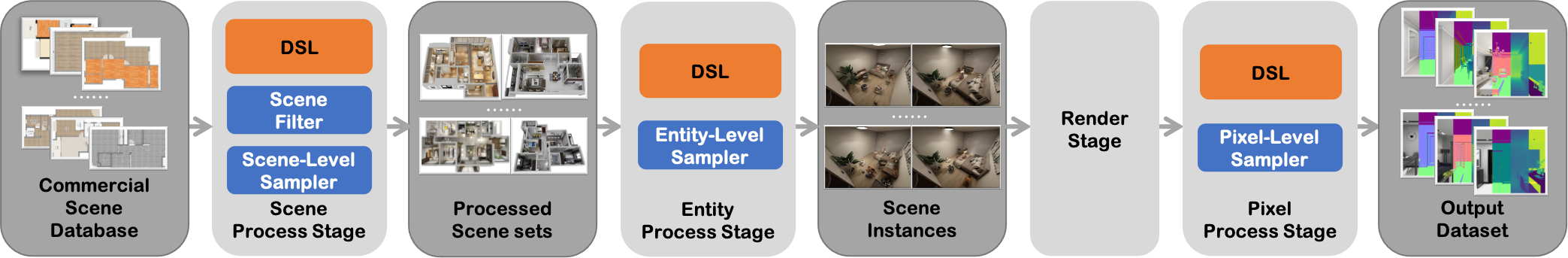

With the rapid development of data-driven techniques, data has played an essential role in various computer vision tasks. Many realistic and synthetic datasets have been proposed to address different problems. However, many challenges remain unresolved: (1) creating a dataset is usually a tedious process that requires manual annotation, (2) most datasets are designed for a single specific task, (3) modifying or randomizing a 3D scene is difficult, and (4) releasing commercial 3D data may raise copyright issues. This paper presents MINERVAS, a Massive INterior EnviRonments VirtuAl Synthesis system, to facilitate 3D scene modification and 2D image synthesis for various vision tasks. In particular, we design a programmable pipeline with a Domain-Specific Language, allowing users to (1) select scenes from a commercial indoor scene database, (2) synthesize scenes for different tasks with customized rules, and (3) render various imagery data, such as visual color, geometric structures, and semantic labels. Our system eases the difficulty of customizing massive numbers of scenes for different tasks and relieves users from manipulating fine-grained scene configurations by providing user-controllable randomness through multi-level samplers. Most importantly, it gives users access to commercial scene databases with millions of indoor scenes while protecting the copyright of core data assets, e.g., 3D CAD models. We demonstrate the validity and flexibility of our system by using our synthesized data to improve performance on different kinds of computer vision tasks.

Online System

We provide each user with a free trial account that has a limited number of scenes and limited machine time; you can sign up here. If you would like to use our system for research purposes, please send the Terms of Use to MINERVAS Group. Once we receive the agreement form, our group will contact you.

DSL Examples

The MINERVAS system allows users to control the data generation pipeline via a Domain-Specific Language (DSL). The DSL is designed for flexibility and ease of use. For flexibility, we build our DSL as an internal DSL in the Python programming language. For ease of use, we provide several common samplers so that users can easily generate diverse scenes for domain randomization.

Here we show some examples of our DSL and the generated results. Only the built-in samplers are shown. For more information about the DSL, please refer to the documentation.
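The idea of an internal DSL built on Python classes can be illustrated with a small stand-alone mock. The sketch below does not use the MINERVAS API: `Instance`, `World`, `Processor`, and `run_pipeline` are hypothetical stand-ins, and the material names are made up. It only shows the general pattern assumed here, in which a user subclasses a processor, overrides `process()`, and a pipeline applies each processor to a shared scene.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Hypothetical stand-in for a scene object (not the MINERVAS class)."""
    id: int
    label: int
    material: str = "default"


@dataclass
class World:
    """Hypothetical stand-in for the scene container."""
    instances: list = field(default_factory=list)


class Processor:
    """Minimal base class: subclasses override process() to edit the world."""

    def __init__(self, world, seed=None):
        self.world = world
        self.rng = random.Random(seed)  # per-processor RNG for reproducibility

    def process(self):
        raise NotImplementedError


class MaterialSampler(Processor):
    """Assigns a random material to instances whose label is in `targets`."""
    targets = {247, 894}  # label IDs borrowed from the Material Sampler example on this page
    materials = ["wood", "metal", "fabric"]  # made-up material names

    def process(self):
        for instance in self.world.instances:
            if instance.label in self.targets:
                instance.material = self.rng.choice(self.materials)


def run_pipeline(world, processor_classes, seed=0):
    """Instantiate each processor in order and apply it to the same world."""
    for offset, cls in enumerate(processor_classes):
        cls(world, seed=seed + offset).process()
    return world


world = World([Instance(1, 247), Instance(2, 894), Instance(3, 12)])
run_pipeline(world, [MaterialSampler])
print([(i.id, i.material) for i in world.instances])
```

Because each processor only overrides `process()`, samplers written this way stay short and can be combined freely, which is the kind of flexibility an internal DSL is meant to offer.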

Furniture Rearrangement

    class FurnitureLayoutSampler(SceneProcessor):
        def process(self):
            for room in self.shader.world.rooms:
                room.randomize_layout(self.shader.world)

Material Sampler

    class MaterialSampler(EntityProcessor):
        def process(self):
            for instance in self.shader.world.instances:
                if instance.label in [247, 894]:  # 247: 'chair', 894: 'desk'
                    self.shader.world.replace_material(id=instance.id)

Light Sampler

    class LightSampler(EntityProcessor):
        def process(self):
            for light in self.shader.world.lights:
                light.tune_temp(1)  # randomize color temperature
                light.tune_intensity(1)  # randomize intensity
                light.tune_random(1.2)  # randomize intensity

Model Sampler

    class ModelSampler(EntityProcessor):
        def process(self):
            for instance in self.shader.world.instances:
                if instance.type == 'ASSET':
                    self.shader.world.replace_model(id=instance.id)

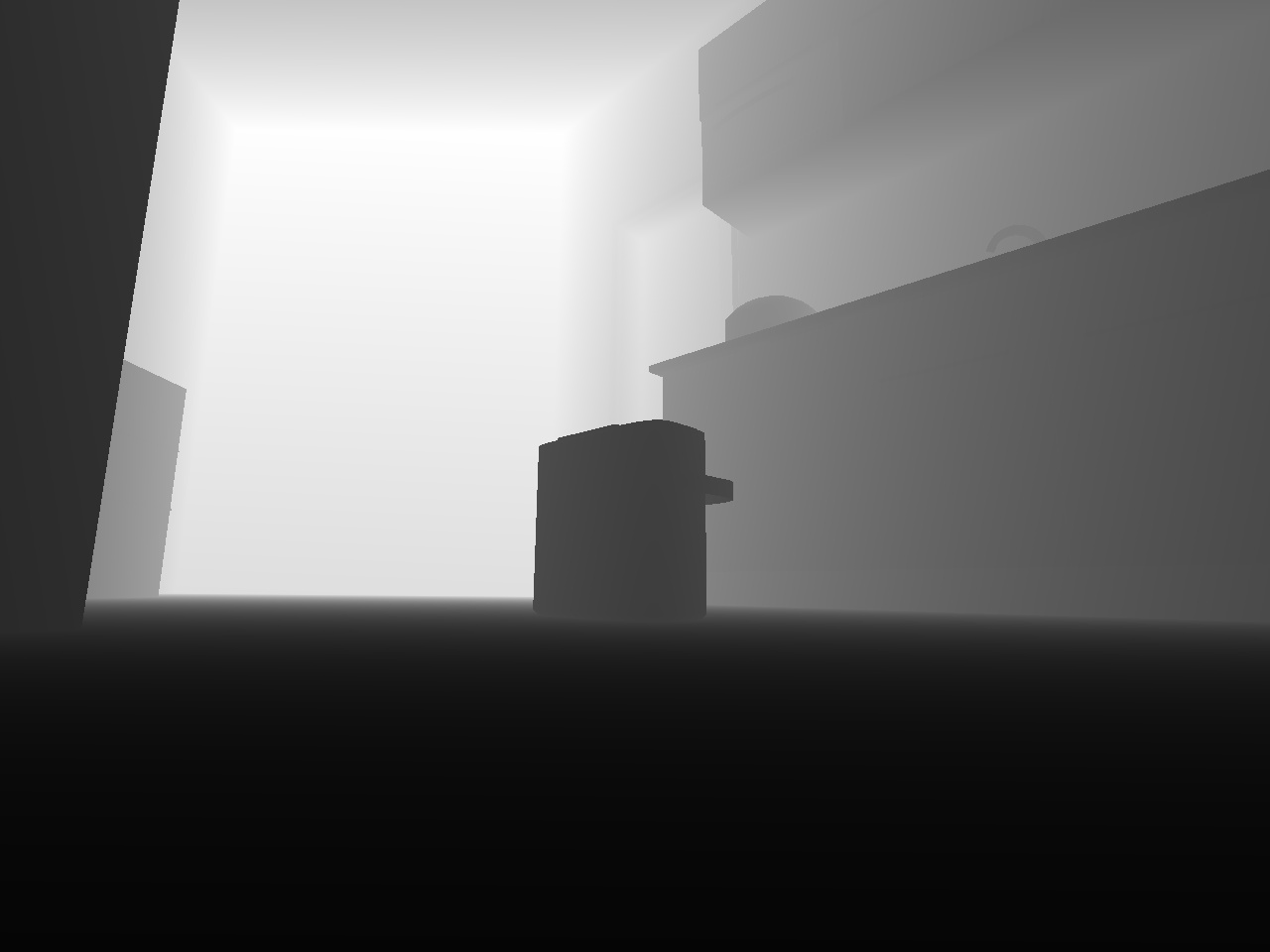

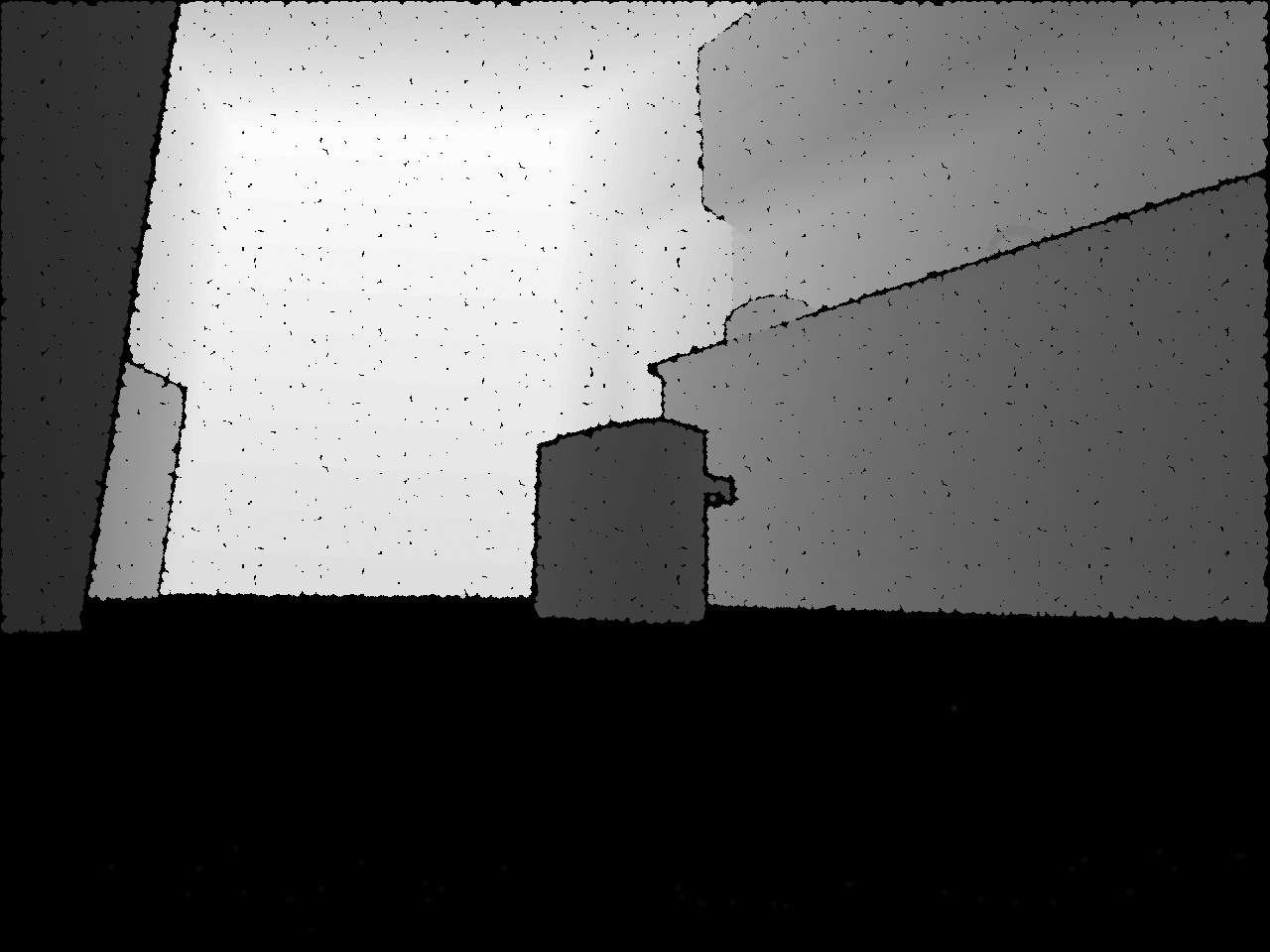

Depth Sampler

    class DepthNoiseSample(PixelProcessor):
        def process(self):
            # 0: NoNoiseModel
            # 1: GaussianNoiseModel
            # 2: PoissonNoiseModel
            # 3: SaltAndPepperNoiseModel
            # 4: KinectNoiseModel
            self.gen_depth(noise=4)
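To give a feel for what depth noise models of this kind typically do, here is a minimal, stand-alone sketch of Gaussian and salt-and-pepper noise applied to a toy depth map. It is not the MINERVAS implementation: the function names, parameters (`sigma`, `prob`, `max_depth`), and default values are all assumptions chosen for illustration, and the Kinect model is not covered.

```python
import random


def add_gaussian_noise(depth, sigma=0.01, seed=None):
    """Return a copy of `depth` with zero-mean Gaussian noise added per pixel.

    Values are clamped at 0 so that depth never becomes negative.
    """
    rng = random.Random(seed)
    return [[max(0.0, d + rng.gauss(0.0, sigma)) for d in row] for row in depth]


def add_salt_and_pepper(depth, prob=0.05, max_depth=10.0, seed=None):
    """Return a copy where each pixel is, with probability `prob`,
    replaced by either 0 or `max_depth`."""
    rng = random.Random(seed)
    noisy = []
    for row in depth:
        new_row = []
        for d in row:
            if rng.random() < prob:
                new_row.append(rng.choice([0.0, max_depth]))
            else:
                new_row.append(d)
        noisy.append(new_row)
    return noisy


depth_map = [[2.0, 2.1, 2.2], [2.0, 2.1, 2.2]]  # toy depth values in meters
gaussian = add_gaussian_noise(depth_map, sigma=0.02, seed=0)
speckled = add_salt_and_pepper(depth_map, prob=0.5, seed=0)
print(gaussian)
print(speckled)
```

Both functions return new nested lists and leave the input untouched, so several noise models can be compared on the same rendered depth map.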

Acknowledgements

We would like to thank Manycore Tech Inc. (Coohom Cloud, Kujiale) for providing the 3D indoor scene database and the high-performance rendering platform, and especially the Coohom Cloud Team for technical support.